The Telltale Signs of AI-Generated Images and Video

Since DALL-E 2 launched in 2022, users of AI image generators have created an average of 34 million images per day. Roughly 15 billion images have been created with AI software since the best-known models, such as Midjourney, launched in 2022.

“This explosion of creation becomes a problem when we start being unable to tell real images from fake ones,” says Michael Holmes of VidPros, a professional video editing service. “And it really becomes problematic when we bring politics into the equation. Posting false AI-generated images and videos could convince voters of things that are untrue, which could be counted as electoral fraud.”

In recent weeks, Elon Musk’s AI tool, Grok, stirred controversy by allowing users to easily generate highly realistic, fake images of political figures such as Trump, Harris, and Biden. Users have flooded social media with these AI-generated images, including disturbing and false scenarios like Trump participating in the 9/11 attacks and Kamala Harris robbing a store at gunpoint.

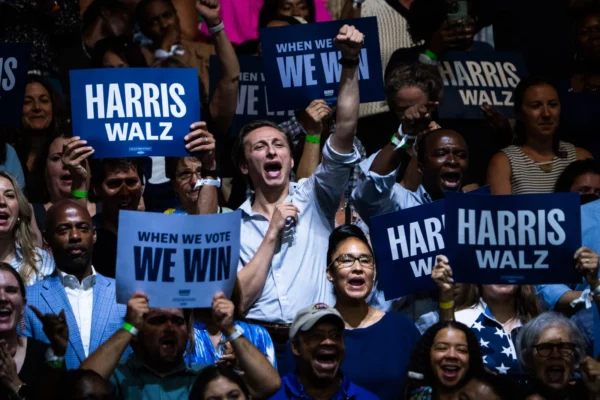

Meanwhile, conspiracy theories have circulated claiming that photos of large crowds at Kamala Harris's rallies were AI-generated. Trump even accused Harris of “A.I.’ing” her rally photos, but the images have been shown to be real: the Harris campaign confirmed they show an actual crowd of 15,000 people at a Michigan event.

In response to growing concerns about the spread of AI images and the authenticity of photos, Michael and his team have put together a list of telltale signs that YCB readers can look out for when deciding whether an image is AI-generated.

Hands, Hands, Hands

Badly generated hands have long been recognized as one of the hallmarks of AI-generated images. Although generators have improved over time, hands with too many fingers, or fingers twisted into odd shapes, are still a common problem.

“In general, AI image generators have a problem with small details,” Michael says. “They struggle with hands, architecture, and small background details. Have a close look at any image you’re suspicious of, and you might spot these telltale mistakes.”

Skin And Hair

AI isn’t great at fine detail, so pores or individual hairs can get lost or look unnatural. AI also tends to repeat patterns, so a zoomed-in view of an AI-generated person’s hairline or eyebrow might show the exact same pattern of hair repeated again and again. Similarly, AI-generated skin can look plasticky, flat, or ‘photoshopped.’

Words

AI image generators are good at just that: generating images. That's why they struggle when asked to produce text. AI-generated text often comes out with spelling mistakes or letters of inconsistent sizes, and sometimes it doesn't resemble any form of human writing at all! “This is often easy to spot if you zoom in and look at the background,” Michael says. “For example, signs and shop windows are often culprits.”

Broken Lines

AI has trouble understanding how shapes exist in 3D space and how perspective works. This is why, when a line such as a door frame or the edge of a desk passes behind another object, it often fails to line up where it reappears on the other side. AI also struggles to apply perspective to objects that look very different from different angles, like aeroplanes. This can make objects look strange and out of place within their surroundings.

Reflections And Shadows

Just as it struggles with perspective, AI also has trouble understanding how shadows and reflections work. Reflections are often simply a flipped copy of the original object rather than the part that would actually be visible in the reflective surface, and shadows often fall at angles that don't match the direction the light is coming from in the image.

“When you ask an AI image generator to make a picture, it looks at the keywords you’ve used to prompt it and then goes through its database to find relevant images, then blends them to make the new image,” Michael says. “It doesn’t understand how objects work and interact with each other in 3D space like a human artist would.”

Inappropriate And Out Of Place Items

AI focuses on what has been prompted, sometimes leading it to fill in background details in odd ways. AI doesn’t have a human conception of what items would be appropriate or typical for any particular scene, so it often adds things that look wildly out of place, like generating a picture of a desk with pens sitting in a full cup of coffee.

“I particularly remember a series of images where the goal was to generate portraits of people speaking at a conference,” Michael says. “While the results were generally impressive, the AI did generate one picture of a female speaker dressed in a bathing suit! But of course, the AI has no idea that’s an inappropriate outfit for a conference.”

Look At The Face

One of the key giveaways for spotting a ‘deepfake’ or an AI-generated video is the face. AI deepfake tech usually swaps a famous person’s face onto another body, so have a look at the edges of the face. Do the skin tones match? Are there odd lines around the face where the skin doesn’t seem to mesh or move correctly? These could be signs of a deepfake.

Michael Holmes from VidPros commented:

“We should be concerned about AI-generated images and videos because they can seriously impact public trust. Donald Trump just this week posted AI-generated images of Taylor Swift endorsing his campaign, which could mislead her fans into voting for him based on a false endorsement. This could also potentially cross into electoral fraud.

“Deepfakes also pose significant risks in financial fraud. Last year, scammers used real footage and an AI voice cloner to create a deepfake of Elon Musk promoting a fake investment opportunity, tricking investors out of thousands of dollars. The video was so believable that investors had no idea it wasn’t really him until their money disappeared.

“In the past, photo or video evidence was considered reliable, but that’s no longer the case. We now need to learn to spot fakes and verify information before trusting it. Always research and verify if something seems questionable, and never invest unless you are completely sure.”